| import pandas as pd |

| import numpy as np |

| import matplotlib.pyplot as plt |

| from matplotlib.font_manager import FontProperties |

| from sklearn.preprocessing import PolynomialFeatures |

| from sklearn.linear_model import LinearRegression |

| from sklearn.metrics import r2_score |

| %matplotlib inline |

| font = FontProperties(fname='/Library/Fonts/Heiti.ttc') |

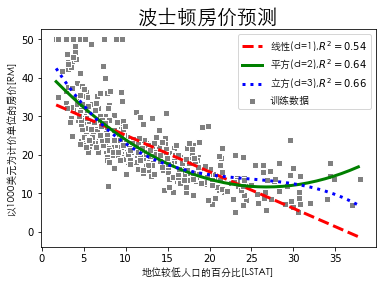

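In "Code: Ordinary Linear Regression" we noted that the feature LSTAT has the strongest correlation with the label MEDV, but the relationship between them is not linear. This time we therefore try polynomial regression to fit that relationship.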
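To make the idea concrete, here is a minimal sketch of what `PolynomialFeatures` does to a single feature column. The toy array `X_demo` and the names `quad_demo` and `X_demo_quad` are made up for illustration and do not come from the housing data.

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures

# Toy single-feature input (hypothetical values, not from the housing data)
X_demo = np.array([[2.0], [3.0]])

# degree=2 expands each x into [1, x, x^2]; the leading 1 is the bias column
quad_demo = PolynomialFeatures(degree=2)
X_demo_quad = quad_demo.fit_transform(X_demo)

print(X_demo_quad)
```

Each row becomes `[1, x, x^2]`, so fitting `LinearRegression` on the expanded matrix is still ordinary least squares, only now on a quadratic curve instead of a straight line.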

| df = pd.read_csv('housing-data.txt', sep=r'\s+', header=0) |

| X = df[['LSTAT']].values |

| y = df['MEDV'].values |

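| # Add quadratic terms (degree-2 polynomial features) |

| quadratic = PolynomialFeatures(degree=2) |

| # Add cubic terms (degree-3 polynomial features) |

| cubic = PolynomialFeatures(degree=3) |

| # Expand X into the quadratic and cubic feature matrices |

| X_quad = quadratic.fit_transform(X) |

| X_cubic = cubic.fit_transform(X) |

| |

| # x-axis points (step 1) used to draw the fitted curves |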

| X_fit = np.arange(X.min(), X.max(), 1)[:, np.newaxis] |

| |

| lr = LinearRegression() |

| |

| # Plain linear regression on the raw feature |

| lr.fit(X, y) |

| lr_predict = lr.predict(X_fit) |

| # R^2 of the linear fit on the training data |

| lr_r2 = r2_score(y, lr.predict(X)) |

| |

| # Quadratic polynomial regression |

| lr = lr.fit(X_quad, y) |

| quad_predict = lr.predict(quadratic.transform(X_fit)) |

| # R^2 of the quadratic fit on the training data |

| quadratic_r2 = r2_score(y, lr.predict(X_quad)) |

| |

| # Cubic polynomial regression |

| lr = lr.fit(X_cubic, y) |

| cubic_predict = lr.predict(cubic.transform(X_fit)) |

| # R^2 of the cubic fit on the training data |

| cubic_r2 = r2_score(y, lr.predict(X_cubic)) |

| print(lr.score(X_cubic, y)) |

| print(cubic_r2) |

| 0.6578476405895719 |

| 0.6578476405895719 |
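The two printed values are identical because `LinearRegression.score` returns the coefficient of determination R², which is the same quantity `r2_score` computes. The sketch below checks this on synthetic data and wraps the feature expansion and the regression in a single pipeline built with `make_pipeline`, so the transformer is fitted once and reused at prediction time. The data are an assumption: a noisy decaying curve that only stands in for LSTAT and MEDV, and `X_syn`, `y_syn`, and `scores` are hypothetical names.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic stand-in for (LSTAT, MEDV): a noisy decaying curve (assumed shape)
rng = np.random.default_rng(0)
X_syn = rng.uniform(1, 40, size=(200, 1))
y_syn = 50 * np.exp(-0.05 * X_syn[:, 0]) + rng.normal(0, 2, size=200)

scores = {}
for degree in (1, 2, 3):
    # The pipeline fits PolynomialFeatures once, then reuses it in predict/score
    model = make_pipeline(PolynomialFeatures(degree=degree), LinearRegression())
    model.fit(X_syn, y_syn)

    score_via_model = model.score(X_syn, y_syn)
    score_via_metric = r2_score(y_syn, model.predict(X_syn))

    # Both routes compute the same training R^2
    assert np.isclose(score_via_model, score_via_metric)
    scores[degree] = score_via_model

print(scores)
```

Because each higher degree contains the lower-degree model as a special case, the training R² cannot decrease as the degree grows. That is a statement about fit on the training data only, not about how well the model generalizes.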